AI, analytics, and accountability: video and data privacy trends we can anticipate in 2023

While 2022 brought several challenges for the data privacy community, 2023 looks more promising for the protection of people’s data, particularly their visual data.

The benefits of AI systems and analytics have become increasingly apparent as a means of improving business, as well as entertainment for the public. The additional spotlight on these systems will also encourage regulation and systems of accountability for how they are used.

We can also expect a continued wave of data protection legislation, especially protections centring on under-18s, as recent data breaches and research studies have highlighted how vulnerable a lot of people’s data is.

What trends can we expect in 2023?

AI-based video analytics will continue to become more widespread across sectors

Many organisations are keen to maximise the value of captured video data by implementing AI-based video analytics. Tech companies are rolling out easily accessible technology that allows controllers to gain a better understanding of their business operations. These products integrate video analytics directly into cameras and help streamline smarter surveillance across different industries.

In 2023, we can expect to see a continued increase in video analytics use, particularly in the retail and transportation sectors, as more video-service providers start to offer it as part of their products. These systems are already useful in retail and transport to help manage shrinkage, facilitate smart retail, and conduct object-tracking in airports and stations. They also help reduce labour burdens on staff and security personnel and help them respond to real-life events more efficiently.

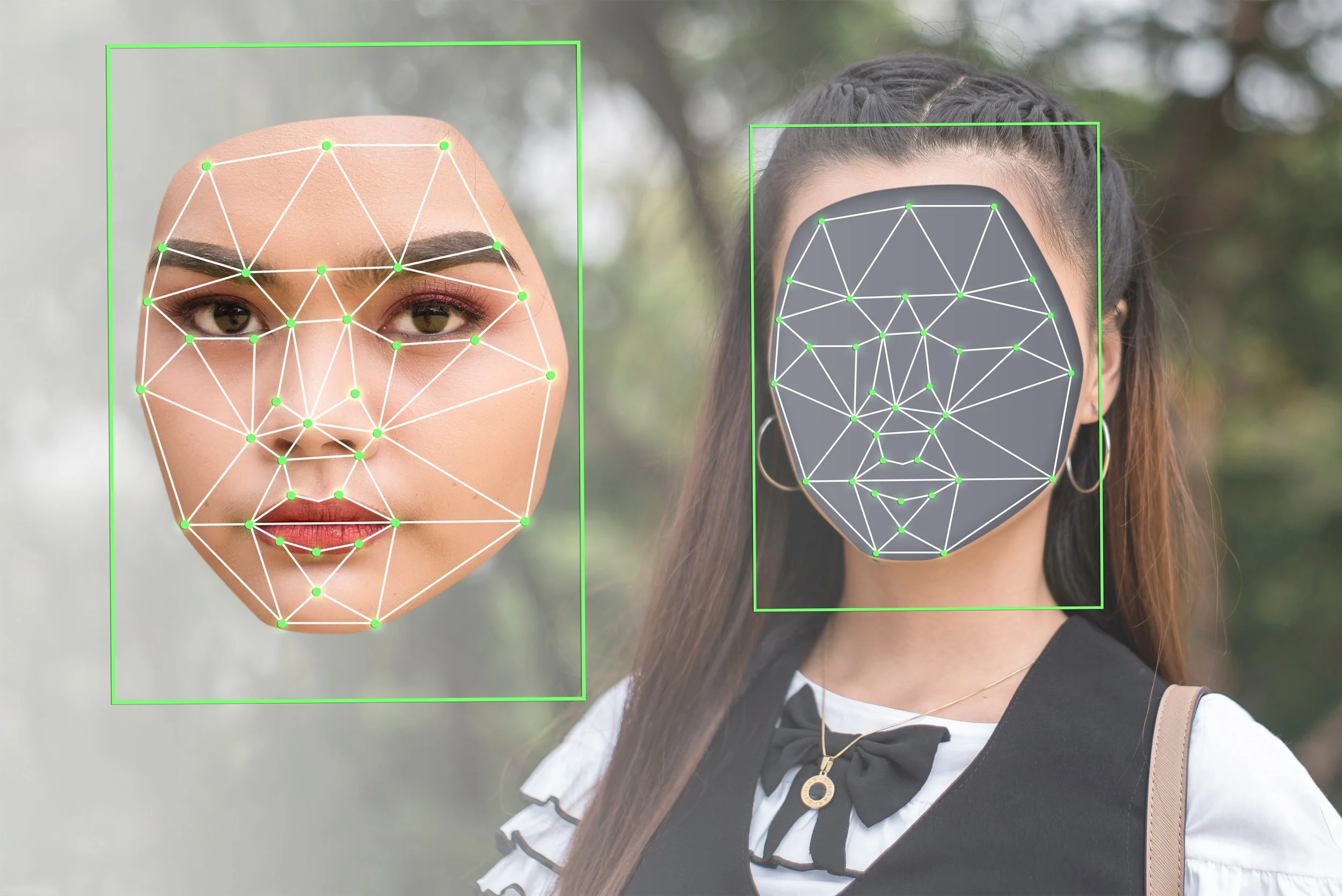

As these systems become more popular, adhering to data protection law and keeping that law up to date with emerging technology become even more important - particularly when this includes the collection and processing of biometric data, such as that used in facial recognition. Regulators will have to provide proper and specific guidance around data protection best practices, e.g. implementing automated anonymisation before processing to help protect data.

As with all AI-based systems, we can expect a push for these systems and their models to be regularly reviewed and updated to help tackle potential machine bias and inaccurate results.

An increase in AI-generated imagery and video, and further movement into the metaverse

In 2022, we saw the increased use of AI-based apps and programmes that can generate images and videos. Apps like Lensa allowed users to generate avatar-style images of themselves, while deepfakes continued to circulate across the Internet.

This year, we can expect a continued increase in the rollout of these types of applications and AI systems, particularly for recreational and entertainment use. Private companies continue to invest more in the metaverse, and our digital footprints are growing larger. Privacy considerations will also be central to this conversation, including data ownership rights, the collection of sensitive biometric data, user-to-user privacy, the protection of minors, and the lack of specific legislation.

Lawmakers and regulators will also have to consider how existing data protection legislation applies to the metaverse, or even create new legislative guidelines to regulate this space. Online platforms will also need clear and understandable data privacy policies about how they collect and use users’ data. While not everything may be fully hashed out, we can hope to see some progress on this over the next year.

More regulation of AI systems

AI is rapidly evolving and becoming more widely distributed, particularly AI systems that utilise biometric data, e.g. facial-recognition and emotion-recognition systems. While these systems can be innovative and useful, they also bring privacy risks for consumers and require a vast amount of personal data. As these systems are susceptible to bias and inaccuracies, they have the potential to be dangerous or discriminatory to different groups in society, so there is a continued effort to ensure they are designed and used ethically and safely.

As a result, this year we can expect to see more stringent and targeted legislation to regulate the production, distribution, and use of AI systems, especially those that pose the greatest risks.

We have already seen regulators make more of an effort to be proactive in supervising the development and use of AI. Last year, the ICO released guidance on AI and personal data, including recommendations for how to reduce risks, improve security, and make systems more explainable.

This year, we can expect a push from the public and regulators to place regulatory obligations on developers of these systems to ensure they protect data and are safe - particularly with regard to law enforcement’s use of facial recognition technology. For one, we hope to see the consolidation of the highly anticipated EU AI Act which, among other things, will look to regulate “high-risk systems” as well as put limitations (and in some cases bans) on live facial recognition and social scoring. Similarly, in the US, there are movements towards an “AI Bill of Rights” to regulate these systems and ensure they work for people.

More action to address children’s privacy and protection online

Children’s online privacy and data rights look to be at the forefront of the agenda this year, with regulators across jurisdictions placing more emphasis on demanding accountability.

Children are increasingly active online, and in turn, their personal data, including visual data, is vulnerable to being accessed, breached, or sold.

Over the last year, we have learned more about the potentially detrimental effects social media and the Internet can have on children and their mental health, as well as their safety. The ICO has warned about the importance of children’s online safety, ed-tech websites have come under scrutiny for how they handle children’s data, and tech giants like Meta have been handed large fines for GDPR breaches relating to children’s data.

In 2022, there were efforts to address this: California passed the California Age-Appropriate Design Code Act, which aims to protect children’s activity online and regulate online services likely to be accessed by under-18s.

In 2023, we hope to see further movement on the UK’s Online Safety Bill to make online platforms more responsible for the safety of their users, especially young people. As apps like Instagram, TikTok and Snapchat capture tonnes of children’s visual data, there is a particular emphasis on these platforms being held accountable for how they collect, manage, and protect that data, as well as for their users’ interactions on these platforms.

Data privacy legislation will continue to be a hot-button issue for different countries

2022 once again saw a lot of positive movement for data protection legislation, with more countries and US states setting bills in motion. In Australia, the large-scale breach of the country’s second-largest telecom provider, Optus, prompted lawmakers to introduce the Privacy Legislation Amendment Bill to help hold companies more accountable for data breaches. Indonesia passed the Personal Data Protection Bill, while India replaced its Personal Data Protection Bill with the newer Digital Personal Data Protection Bill.

In 2023, we can hope to see progress on the proposed American Data Privacy and Protection Act (ADPPA), which would be the first of its kind in the US, introducing comprehensive data protection obligations across all US states. Additionally, we can perhaps anticipate an updated version of Privacy Shield in 2023, allowing for the free flow of cross-border data between the US and the EU.

In the UK, we may also gain better insight into the future of GDPR. In 2022, the government signalled that it might scrap the existing UK GDPR and replace it with a new data privacy system.

With all this, consumer trust will continue to become more of a priority for businesses. For businesses that wish to collect as much data as possible, privacy can sometimes feel like a hurdle. However, we can expect to see a continued re-framing of the conversation into one of consumer trust and an emphasis that data privacy should not be viewed as a hindrance or a “nice-to-have” but rather as essential for maintaining consumer trust and sustaining business. Our visual data will continue to be accessed more than ever before, and so we need assurances that it is being properly collected, used, and shared.